What is bot traffic, and how to stop traffic bots?

Sanja Trajcheva

Cyber Risks & Threats | July 10, 2021

Bot traffic is essentially non-human traffic to a website. Bots are used extensively by online services to collect data from the internet and to enhance our user experience.

Your search results on Google would be more like AltaVista or AOL if it weren’t for bots (if you’re old enough to get those references, you’ll remember that search results pre-Google were pretty rubbish).

In fact, all of those automated website traffic bots are designed to make our lives much easier. And for the most part, they do.

What are bots?

An internet bot is a piece of code that performs one or more tasks. Usually hosted on a computer server or in a data center, bots are typically given repetitive jobs or asked to collect huge amounts of data relatively quickly.

Although the image of a robot or crazy scuttling robo-beetle running around the internet is quite cool, the truth is it’s just an algorithm.

The program runs, searches the internet, and delivers the required result, usually in a fraction of a second.

Anyone can create an internet traffic bot – in fact, it’s the ease of making them that causes some problems. Even relatively inexperienced web coders can program a simple bot with a little bit of study.

Although AI and machine learning are accelerating rapidly, at the moment, these bots are not sentient. They simply do what they are programmed to do.
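To give a sense of how little code a simple bot needs, here is a minimal Python sketch of the first thing most crawlers do: read a page and collect the links on it. It is an illustration only, not how any particular bot is built. The names `LinkCollector` and `extract_links` are made up for this example, and the page is passed in as a string rather than downloaded.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the target of every <a href="..."> tag it sees (hypothetical helper)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Turn relative links such as "/pricing" into absolute URLs.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return all link targets found in an HTML string, in document order."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


page = '<p><a href="/pricing">Pricing</a> <a href="https://example.org/x">X</a></p>'
links = extract_links(page, "https://example.com/blog/")
```

A real crawler would go on to fetch each of those links and repeat the process, which is exactly the kind of repetitive task bots are good at.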

Heavy lifting bots

With the ability to perform repetitive tasks quickly, traffic bots can be used for both good and bad.

“Good” bots can, for example, check websites to ensure that all links work, collect useful data such as search rankings, or analyze site performance.

“Bad” bots, on the other hand, can be unleashed to infiltrate websites to steal data, spread viruses, or overload servers with distributed denial of service (DDoS) attacks.

For most end users, such as people simply browsing websites, bot traffic isn’t really an issue.

But for site owners, understanding bot traffic is critical, whether it’s to ensure that Google is crawling your site properly, to enhance the accuracy of your analytics results, to ensure the health and performance of your website, or to prevent malicious behavior on your website and ads.

The fact is that more than half of all web traffic is bot traffic. What’s disturbing, however, is that 28.9% of all traffic is thought to be from malicious sources. To understand how this kind of website bot traffic can be damaging, we’ll need to take a closer look at the internet traffic out there…

Different types of bot traffic

As we’ve mentioned, there are good and bad types of website bot traffic. One thing to remember is that internet traffic bots are a very diverse bunch.

On one hand, we have complex scripts developed by companies to collect a wide array of data. On the other, we have simple programs that perform one or two simple tasks. And we also have those annoying and malicious programs like spam bots or form-filling bots.

“Good Bots”

- SEO: Search engine crawler bots crawl, catalog, and index web pages, and the results are used by search providers like Google to provide their service

- Website Monitoring: These bots monitor websites and website health for issues like loading times, downtimes, and so on

- Aggregation: These bots gather information from various websites or parts of a website and collate them into one place

- Scraping: Within this category, there are both “good” and “bad” bots. These bots “scrape” or “lift” information from websites, for example, phone numbers and email addresses. Scraping (when legal, of course) can be used for research, for example, but can also be used to illegally copy information or for spamming

“Bad Bots”

- Spam: Spam bots are used for spreading content, often within the “comments” section of websites, or to send you those phishing emails from Nigerian Princes

- DDoS: Complex bots can be used to take down your site with a distributed denial of service attack, often as part of a coordinated attack

- Ad Fraud: Bots can be used to click on your ads automatically, often used together with fraudulent websites to boost the payout for ad clicks – there is a rich history of ad clicker bots out there

- Ransomware and other malicious attacks: Bots can be used to unleash all kinds of havoc, including ransomware attacks, which encrypt devices and then demand a payout to ‘unlock’ them


How to detect bot traffic?

Detecting bot traffic is the first step in ensuring that you’re getting all the benefits of the good bots (like appearing in Google’s search results) while preventing the bad bots from affecting your business.

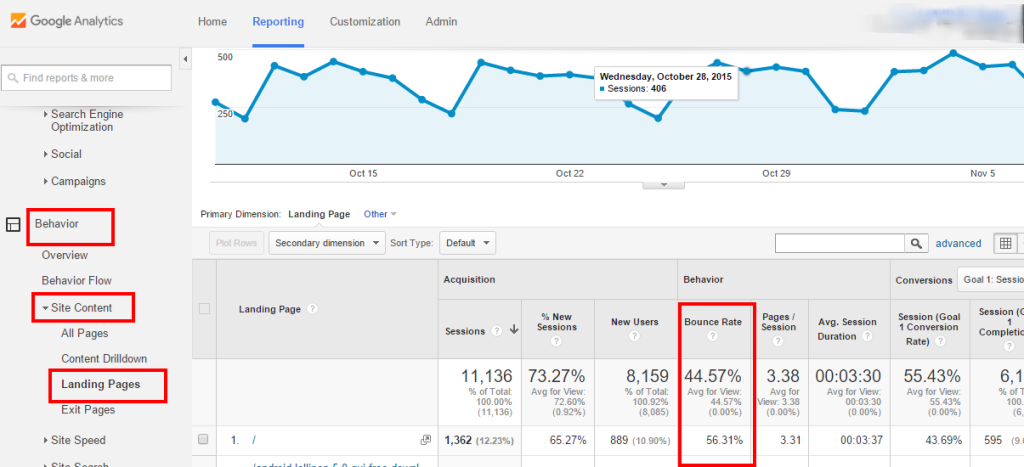

When figuring out how to detect bot traffic, the best place to start is with Google Analytics.

If you have wondered to yourself, “Can I see bot traffic in my Google Analytics account?” the answer is yes, up to a point.

If you know what to look out for, you’ll be able to get an indication of bot traffic, but you may not find a smoking gun.

The key metrics to keep track of here are:

- Bounce Rate

- Page Views

- Page Load Metrics

- Avg Session Duration

The bounce rate is expressed as a percentage and shows the share of visitors who navigate away from your site after viewing only one page. Humans are most likely to arrive on your site (from a search engine result, for example) and then click through to explore your offering. A bot isn’t interested in exploring your site, so it will often “hit” one page and leave. An unusually high bounce rate is therefore a good indicator of bot traffic.

Page Views are almost the reverse of this. The average visitor might visit a few pages on your site and then move on. If you suddenly see traffic where 50 or 60 pages are being viewed, this is most likely not human traffic.

Page load metrics are also really important to monitor. If load times suddenly slow down and your site is feeling sluggish, this could indicate a jump in bot traffic or even a DDoS (Distributed Denial of Service) attack using bots. A tech solution might be required in some cases (more about this below), but this is a good first step in how to detect bots.

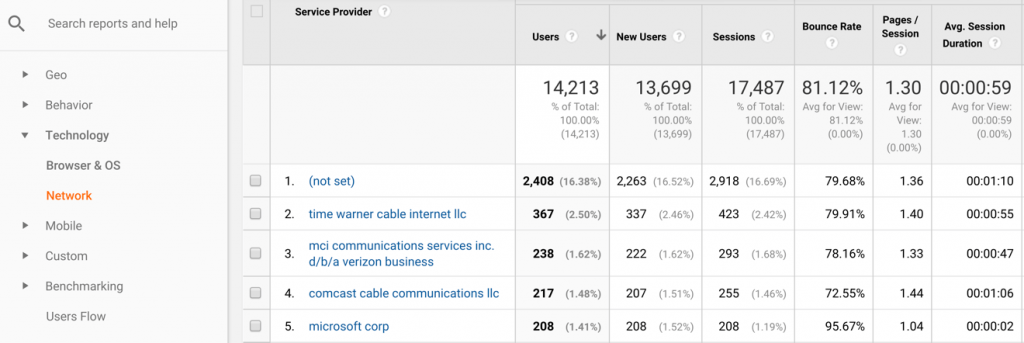

Average session duration will tell you a lot about how users from different sources are interacting with the site. In the accompanying image, the Microsoft Corp Network is most likely bringing non-human traffic. Two seconds is classic for bot clicks.
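If you export these numbers per traffic source, the checks described above can be turned into a rough screening script. The Python sketch below is one way to do it, under stated assumptions: the `SourceStats` structure, the `bot_signals` function, and all three thresholds are invented for illustration and are not Google Analytics settings. Treat the output as a prompt to look closer, not as proof.

```python
from dataclasses import dataclass


@dataclass
class SourceStats:
    """Aggregated analytics for one traffic source (hypothetical structure)."""
    source: str
    sessions: int
    bounces: int             # sessions that viewed exactly one page
    page_views: int
    total_duration_s: float  # summed session duration, in seconds


def bot_signals(stats, max_bounce_rate=0.9, max_pages_per_session=50,
                min_avg_duration_s=3.0):
    """Return heuristic warning signs for one source. Thresholds are assumptions."""
    if stats.sessions == 0:
        return []
    signals = []
    bounce_rate = stats.bounces / stats.sessions
    pages_per_session = stats.page_views / stats.sessions
    avg_duration_s = stats.total_duration_s / stats.sessions
    if bounce_rate >= max_bounce_rate:
        signals.append("high bounce rate")
    if pages_per_session >= max_pages_per_session:
        signals.append("very high page views per session")
    if avg_duration_s <= min_avg_duration_s:
        signals.append("very short sessions")
    return signals


# Made-up numbers: one source averaging two-second sessions, one ordinary source.
suspect = SourceStats("microsoft corp network", 200, 196, 204, 400.0)
organic = SourceStats("organic search", 100, 45, 320, 9500.0)
suspect_signals = bot_signals(suspect)
organic_signals = bot_signals(organic)
```

A source that trips several signals at once deserves a closer look. A single signal on its own can have an innocent explanation, such as a landing page that answers the visitor’s question immediately.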

How To Stop Bots From Crawling My Site

There are different reasons why some people might want to stop bots from crawling their sites. For some, it might be simply guarding gated content; for others, it might be preventing hackers from accessing databases. Luckily, protecting sections of your website from bot traffic isn’t too tricky, in theory at least.

Your first stop is your robots.txt file. This is a simple text file that gives guidelines to bots visiting your page in terms of what they can and can’t do. Without a robots.txt file, any bot will be able to visit your page. You can also set up your file so that no bots can visit your page, although that would shut out the good bots too, including the search engine crawlers that get you into Google’s results.

The “middle ground” is to put rules in place, and the good news is that the “good” bots will abide by these. The bad news, however, is that the “bad” bots will disregard these rules entirely.
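As a rough illustration of what such rules look like, the Python sketch below embeds a small robots.txt and uses the standard library’s `urllib.robotparser` to check what a well-behaved bot would conclude from it. The `/members/` path, the `ExampleBadBot` name, and the `is_allowed` helper are invented for this example.

```python
from urllib.robotparser import RobotFileParser

# A sample robots.txt: every bot is asked to stay out of /members/,
# and one named bot (hypothetical) is asked to stay away entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /members/

User-agent: ExampleBadBot
Disallow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if a rule-following bot with this user agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


blog_ok = is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/")
members_ok = is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/members/page")
bad_bot_ok = is_allowed(ROBOTS_TXT, "ExampleBadBot", "https://example.com/blog/")
```

Keep in mind that this only models a bot that chooses to follow the rules. Nothing in robots.txt technically prevents a request, which is why the “bad” bots can simply ignore it.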

When it comes to the “bad” bots, you’ll need to engage a tech solution. This is where a CDN (Content Delivery Network) service comes in. One of the advantages of a good CDN is the protection it can provide against malicious bots and DDoS attacks. Some of the most common ones are Cloudflare and Akamai, which can stop some bots from crawling sites. As Cloudflare themselves say, “Cloudflare’s data sources will help reduce the number of bad bots and crawlers hitting your site automatically (not all).”

There are also purpose-built anti-bot solutions that can be installed, but it’s important to note that most of these can protect your website relatively well but cannot protect you outside of that – for example, your ads on search engines and other properties.

Another more tedious (and less effective) option is to manually block IPs where you know that the traffic is bot-related. A trick you can use is to check the geographic origin of the traffic. If your traffic is usually from the US and Europe, and suddenly you’re seeing a lot of IPs from the Philippines, it could be a bot or click farm.
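Both ideas can be sketched in a few lines of Python, assuming you have the visitor IPs and their countries from your server logs or analytics export. The function names, the 20% threshold, and the IP ranges (reserved documentation ranges, not real offenders) are assumptions made for illustration.

```python
import ipaddress
from collections import Counter


def build_blocklist(cidrs):
    """Parse CIDR strings such as '203.0.113.0/24' into network objects."""
    return [ipaddress.ip_network(cidr) for cidr in cidrs]


def is_blocked(ip, blocklist):
    """Return True if the IP address falls inside any blocked network."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in blocklist)


def unusual_countries(baseline, recent, min_share=0.2):
    """Countries absent from the baseline that make up at least
    min_share of recent visits (the threshold is an assumption)."""
    known = set(baseline)
    counts = Counter(recent)
    total = len(recent)
    return sorted(
        country for country, n in counts.items()
        if country not in known and n / total >= min_share
    )


blocklist = build_blocklist(["203.0.113.0/24", "198.51.100.17/32"])
blocked = is_blocked("203.0.113.45", blocklist)
not_blocked = is_blocked("192.0.2.10", blocklist)
new_countries = unusual_countries(
    baseline=["US", "DE", "US", "FR"],
    recent=["US", "PH", "PH", "PH", "DE"],
)
```

In practice the blocklist would be enforced in your firewall, web server, or CDN rather than in application code, and a sudden new country is only a reason to investigate, since it can also come from a legitimate campaign or press mention.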

Why is it important to protect your ads?

One of the biggest threats to your ad campaigns, and by extension to the future of your business, is bot traffic. CHEQ and the University of Baltimore’s economics department estimated that even opportunistic bots were set to cost businesses $35 billion in 2021.

Bots can be programmed to click on your ads, leaving chaos in their wake: draining your Google Ads account, causing Google to rate your ad’s performance as poor, stopping your ad from being displayed while competitors’ ads are featured prominently, impacting conversion rates, and rendering your analytics meaningless.

In today’s digital advertising industry, bots are a huge help but also have the potential to be very damaging. Taking a proactive approach to PPC protection is the only way to ensure that your ad campaigns are safe. All ad managers should consider using third-party software to determine how their traffic is being affected by bot activity.

Take back control and block traffic bots, improve your website traffic quality, win more real customers, and stop wasting money.

CHEQ Essentials is the industry-leading click fraud prevention software, highly rated by marketing professionals and business owners. To block fraud on your PPC ads, including bot traffic, sign up for your free trial of CHEQ Essentials.
