Ads.txt was supposed to make organised click fraud harder by letting websites declare exactly who is authorised to sell their display ads. Spoofed websites, one of the main channels for monetising click fraud, have always been a big problem for marketers, so eliminating them should have been a panacea of sorts.

Methbot and 3ve, two of the most infamous click fraud campaigns, highlighted the issue, with thousands of websites spoofed wholesale.

Unveiled in 2017, around the time that the huge 3ve campaign was at its peak, ads.txt was a simple idea designed to iron out these wrinkles in the system. But here we are in 2021, and we’re still seeing ad fraud using spoofed websites.

So what is going on, and what is the future for ads.txt?

Before we get into the nitty gritty, let's quickly explain what ads.txt is.

Ads.txt explained

Ads.txt is a simple text file, used by publishing websites, which lists authorised vendors who can display ads on their site.

The theory goes that, with authorised vendors listed in this file, fraudulent parties cannot spoof a website to host ads without its consent. It also gives advertisers confidence that a publisher is genuine and offers some transparency around which ad platforms are using the site.

The "ads" stands for Authorized Digital Sellers, and of course doubles as "ads" in the advertising sense. Not just a clever name.

And the .txt bit simply tells you that this is a plain text file, the kind you can open and edit in Notepad or any other text editor.

This text file is simply added to the root of a publisher's domain, where advertising platforms use it to verify inventory and publisher relationships. The vendors listed can include individual ad exchanges such as Rubicon Project, native ad providers like Taboola, and the behemoths Google and Facebook Ads.

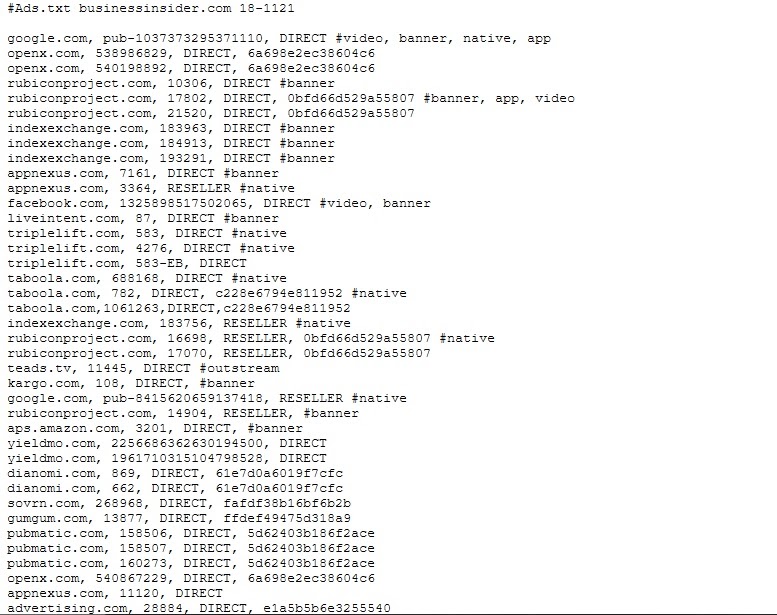

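Each line (record) in an ads.txt file follows the same format: the domain name of the advertising system, the publisher's seller account ID on that system, and the relationship (DIRECT if the publisher controls the account itself, RESELLER if it has authorised another company to sell on its behalf).

There is also an optional fourth field for a certification authority ID, such as the one issued by the Trustworthy Accountability Group (TAG). Anything after a # is a comment, which publishers sometimes use to note the ad formats covered.

ADSYSTEMDOMAIN.com, 123456, DIRECT, TAGCERTID # banner, native, video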

A typical ads.txt file looks like this one, which is from BusinessInsider.com.
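To make the record format concrete, here is a minimal Python sketch of how a tool might split one ads.txt line into its fields. It is not an official IAB parser: the `AdsTxtRecord` and `parse_record` names are hypothetical, and it simplifies things by treating the relationship as case-insensitive and quietly skipping comments, variable lines such as `contact=...`, and lines it cannot parse.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdsTxtRecord:
    ad_system_domain: str                    # field 1: domain of the ad system
    seller_account_id: str                   # field 2: publisher's ID on that system
    relationship: str                        # field 3: DIRECT or RESELLER
    cert_authority_id: Optional[str] = None  # field 4 (optional), e.g. a TAG ID


def parse_record(line: str) -> Optional[AdsTxtRecord]:
    """Parse one ads.txt line, returning None for anything that isn't a seller record."""
    data = line.split("#", 1)[0].strip()     # drop comments such as "# banner, native"
    if not data or "=" in data.split(",", 1)[0]:
        return None                          # blank line, comment, or contact=... variable
    fields = [f.strip() for f in data.split(",")]
    if len(fields) < 3:
        return None                          # malformed: required fields missing
    relationship = fields[2].upper()         # assumption: accept "Direct" as "DIRECT"
    if relationship not in ("DIRECT", "RESELLER"):
        return None
    cert = fields[3] if len(fields) > 3 and fields[3] else None
    return AdsTxtRecord(fields[0].lower(), fields[1], relationship, cert)


record = parse_record("adsystem.example, 123456, Direct, TAGCERTID # banner, native, video")
print(record)
```

The domain and IDs in the usage line are placeholders, not real accounts.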

You can check any website's ads.txt by adding /ads.txt to the end of its domain name, for example example.com/ads.txt.
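The same lookup is easy to script. This Python sketch uses only the standard library; `ads_txt_url` and `fetch_ads_txt` are hypothetical helper names, and it assumes HTTPS on the host exactly as given rather than working out the site's root domain or trying HTTP as a fallback.

```python
from urllib.parse import urlsplit
from urllib.request import urlopen


def ads_txt_url(site: str) -> str:
    """Return the ads.txt location for a bare domain or any page URL on that site."""
    # Accept "example.com" as well as "https://example.com/some/page".
    host = urlsplit(site if "://" in site else "https://" + site).hostname
    if not host:
        raise ValueError(f"no host name found in {site!r}")
    # Assumption: HTTPS on the host exactly as given (no root-domain lookup).
    return f"https://{host}/ads.txt"


def fetch_ads_txt(site: str, timeout: float = 10.0) -> str:
    """Download a site's ads.txt as text (needs network access)."""
    with urlopen(ads_txt_url(site), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(ads_txt_url("https://www.example.com/news/story"))  # https://www.example.com/ads.txt
```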

Any publisher can use ads.txt, and in fact many PPC and content syndication platforms require publishers to add an ads.txt file before running ads.

The development of ads.txt

Developed by IAB Tech Lab (the technology arm of the Interactive Advertising Bureau), the program has worked well and has even been extended to mobile app publishers with app-ads.txt. This allows apps and app vendors to monetise their platforms and to build trust between publishers and advertisers.

It's a simple solution to a big problem, and one that the online advertising community has slowly embraced. But the program has not been without its teething problems, with resourceful fraudsters finding ways to exploit ads.txt.

One early problem was that fraudulent sites would simply add an ads.txt file to their own domain, which advertisers took as proof that the site was genuine. However, an ads.txt file says nothing about the quality of the traffic, or even about the quality of the website itself.

And there are some other more sinister problems at play too.

404Bot and ads.txt

By its own admission, the IAB never intended ads.txt to eliminate ad fraud entirely, but rather to offer a level of transparency between publishers and advertisers.

There have also been some exploits of the system that have slightly undermined the intention of the initiative. Perhaps the most damaging is 404Bot, which is thought to have made at least $15 million for the fraud group behind it since it began in 2018.

404Bot works by targeting sites whose ads.txt files list a large number of vendors (usually lists that are out of date) and then spoofing those sites to generate fake video impressions. It's a bit complicated, so here's the "explain like I'm 5" version.

Site A and site B both have ads.txt lists that are very long and haven't been updated for a while. 404Bot takes a look at the ads.txt lists from both of these sites and generates a spoofed page.

Consider the two following (made up, obviously) websites:

Myblogsite.com/my-favourite-cat-videos

Newswebsite.com/big-events-in-London-2020

404Bot creates a composite of the two that is very hard for the human eye to spot and, to all intents and purposes, looks like genuine inventory.

For example, a spoofed URL based on the two sites above would look like:

Myblogsite.com/big-events-in-London-2020

Grafting one site's page name onto another site's domain is simple yet effective. Because the spoofed page doesn't actually exist, anyone who visits it will simply see a 404 error telling them that the page is not there.

However, the spoofed page is rendered on a server with an ad embedded in it, and is then "viewed" by a bot thought to be powered by the Bunitu trojan.

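So by pairing a real domain name with a fake page, the developers of 404Bot have found a flaw in the ads.txt framework: ads.txt authorises sellers for a whole domain and says nothing about which pages on it actually exist. It's a flaw that looks tricky to fix.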
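Here is a minimal Python sketch of why the trick works, under a deliberately simplified model of ads.txt checking. `spoofed_url`, `passes_domain_check` and the `authorised` mapping are hypothetical stand-ins for whatever a real ad platform does, and the domains and seller ID are made-up examples. Because the check looks only at the host name of the page URL, the spoofed URL passes exactly as the genuine one does.

```python
from urllib.parse import urlsplit, urlunsplit


def spoofed_url(domain_from: str, path_from: str) -> str:
    """Put the page path of one real URL onto the domain of another."""
    d, p = urlsplit(domain_from), urlsplit(path_from)
    return urlunsplit((d.scheme, d.netloc, p.path, p.query, ""))


def passes_domain_check(page_url: str, ad_system: str, seller_id: str,
                        authorised: dict[str, set[tuple[str, str]]]) -> bool:
    """Simplified ads.txt check: is this seller listed for the page's domain?

    Only the host name is used; nothing checks that the page itself exists.
    """
    host = (urlsplit(page_url).hostname or "").removeprefix("www.")
    return (ad_system.lower(), seller_id) in authorised.get(host, set())


# Hypothetical ads.txt data: myblogsite.com authorises one seller account.
authorised = {"myblogsite.com": {("adsystem.example", "123456")}}

genuine = "https://myblogsite.com/my-favourite-cat-videos"
fake = spoofed_url(genuine, "https://newswebsite.com/big-events-in-London-2020")

print(fake)  # https://myblogsite.com/big-events-in-London-2020
print(passes_domain_check(genuine, "adsystem.example", "123456", authorised))  # True
print(passes_domain_check(fake, "adsystem.example", "123456", authorised))     # True
```

Catching the fake would need information ads.txt doesn't carry, such as whether the page actually exists or who really rendered it.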

Reseller confusion

Reselling display ads is big business. For example, AppNexus, OpenX, MoPub and Rubicon Project have grown into advertising giants by helping businesses maximise their brand visibility and enabling site owners to monetise their sites.

Of course, there are plenty of smaller advertising agencies doing much the same thing. But here is where the confusion starts, and where another flaw in ads.txt is revealed.

Big brands are happy to use resellers, with many using multiple companies to sell their ads across a huge online portfolio.

As an example, CNN has 15 resellers listed in its ads.txt file, and ESPN has 207. Getting those ads in front of as many eyes as possible means higher conversions, in theory. So if you have a budget like ESPN's, why not throw money at it?
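Counts like these are easy to reproduce for any site. The Python sketch below tallies DIRECT and RESELLER records in the text of an ads.txt file. `relationship_counts` is a hypothetical helper; it counts lines rather than distinct companies (one reseller can appear on several lines), and it assumes you have already downloaded the file.

```python
from collections import Counter


def relationship_counts(ads_txt: str) -> Counter:
    """Count DIRECT and RESELLER records (lines, not companies) in an ads.txt file."""
    counts: Counter = Counter()
    for line in ads_txt.splitlines():
        fields = [f.strip() for f in line.split("#", 1)[0].split(",")]
        # Skip blanks, comments, variables such as contact=..., and short lines.
        if len(fields) < 3 or "=" in fields[0]:
            continue
        relationship = fields[2].upper()
        if relationship in ("DIRECT", "RESELLER"):
            counts[relationship] += 1
    return counts


sample = """\
# Hypothetical ads.txt
contact=adops@example.com
adsystem.example, 123456, DIRECT
exchange-a.example, 987, RESELLER
exchange-b.example, 555, RESELLER, abc123
"""
print(relationship_counts(sample))  # Counter({'RESELLER': 2, 'DIRECT': 1})
```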

One issue with this is that some smaller resellers have been reaching out to publishers directly and asking to be added to their ads.txt lists. The usual reason given is to build new relationships with publishers, but another motive is to sidestep working with bigger platforms like OpenX or Rubicon Project.

Industry sentiment suggests that some of these smaller resellers are trying to game the system, often by asking for additional vendors to be included in the list. Many of these additional vendors are genuine publishers in their network, but others are sometimes associated with shady practices.

These practices might include unauthorised reselling of ads, using low-quality video players (for example, ones that play at low resolution) or using fake traffic to inflate views.

Maintaining your ads.txt

Another problem with ads.txt is that publishers don’t always keep their lists up to date, with platforms added but never removed. This adds another layer of potential exposure to fraud, with expired domains easily hijacked, or fraudulent publishers left to their own devices.

In fact, the bigger an ads.txt file becomes, the harder it is to maintain. For small sites, an annual audit of their ads.txt should suffice, but larger publishers or those with a complex network of advertising partners should spring clean regularly.
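A script can handle the tedious part of that spring clean. The Python sketch below flags duplicate records, records for ad systems that are not in the publisher's own list of current partners, and lines that don't parse. The `audit_ads_txt` name and the `current_partners` set are hypothetical; in practice that set would come from the publisher's own contracts or ad-ops records, and anything flagged still needs a human decision.

```python
def audit_ads_txt(ads_txt: str, current_partners: set[str]) -> dict[str, list[str]]:
    """Flag ads.txt lines worth a second look during a periodic clean-up."""
    report: dict[str, list[str]] = {"duplicate": [], "not_current_partner": [], "unparsed": []}
    partners = {p.lower() for p in current_partners}
    seen: set[tuple[str, str, str]] = set()
    for line in ads_txt.splitlines():
        data = line.split("#", 1)[0].strip()
        if not data or "=" in data.split(",", 1)[0]:
            continue  # blank line, comment, or a variable such as contact=...
        fields = [f.strip() for f in data.split(",")]
        if len(fields) < 3 or fields[2].upper() not in ("DIRECT", "RESELLER"):
            report["unparsed"].append(line)
            continue
        key = (fields[0].lower(), fields[1], fields[2].upper())
        if key in seen:
            report["duplicate"].append(line)
        seen.add(key)
        if key[0] not in partners:
            report["not_current_partner"].append(line)  # candidate for removal
    return report


sample = """\
adsystem.example, 123456, DIRECT
adsystem.example, 123456, DIRECT
old-exchange.example, 777, RESELLER
this line is broken
"""
for issue, lines in audit_ads_txt(sample, {"adsystem.example"}).items():
    print(issue, lines)
```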

Industry uncertainty

Although the adoption rate for ads.txt has been pretty solid, with around 75% of major publishers jumping on board, it’s not all plain sailing.

A recent report suggests that ads.txt adoption actually dropped during 2019, from a high of over 80% to just under 75%. Given that these figures cover the top 1,000 websites, the drop raises a few questions about confidence in the effectiveness of ads.txt.

In fact, the same report also suggests that ads.txt has had minimal effect on invalid clicks: sites using ads.txt saw only around 3% fewer invalid clicks than those not using it. Not a massive difference at all.

So although the majority of major publishers are still using ads.txt, it’s still looking like there is a way to go before those fraud loopholes are closed.

Ads.txt vs click fraud

Although ads.txt is a great idea, and one that has definitely had an impact on organised ad fraud, it isn't the miracle cure some had hoped for. As we've seen, 404Bot has exploited outdated lists and is still a threat as of early 2021.

Ads.txt itself is not a guarantee that you’ll avoid fraud on your display ads, but it’s one of a number of protective processes.

And ads.txt doesn't protect against low-quality traffic such as bots and click farms, or against general click fraud from competitors or malicious actors. It's designed to prevent organised ad fraud, not day-to-day invalid traffic.

Using ClickCease adds an extra sturdy layer of click fraud protection for peace of mind. The algorithms of specialist click fraud software like ClickCease are designed to block the activities of botnets and suspicious IP addresses.

This means sneaky bots like 404Bot are kept in check, and you can keep tabs on any suspicious activity on your PPC campaigns.

Sign up for a diagnostic check with ClickCease, with a free trial to monitor your ad traffic.

You can find out more about click fraud and ad fraud in our in-depth guide.